Elasticsearch: How we sped up news feed and user wall display

In my previous post, I talked about how to speed up reads and writes by coupling Redis with Neo4j. Now I want to share how you can take load off your server by using Elasticsearch to speed up the news feed and the user wall.

1/ Show me your news feed

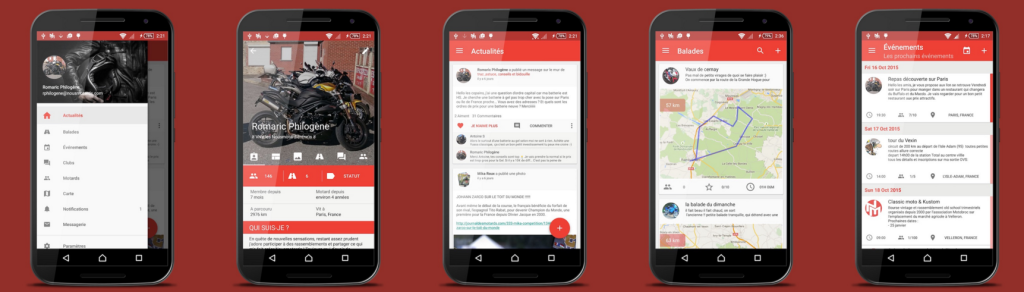

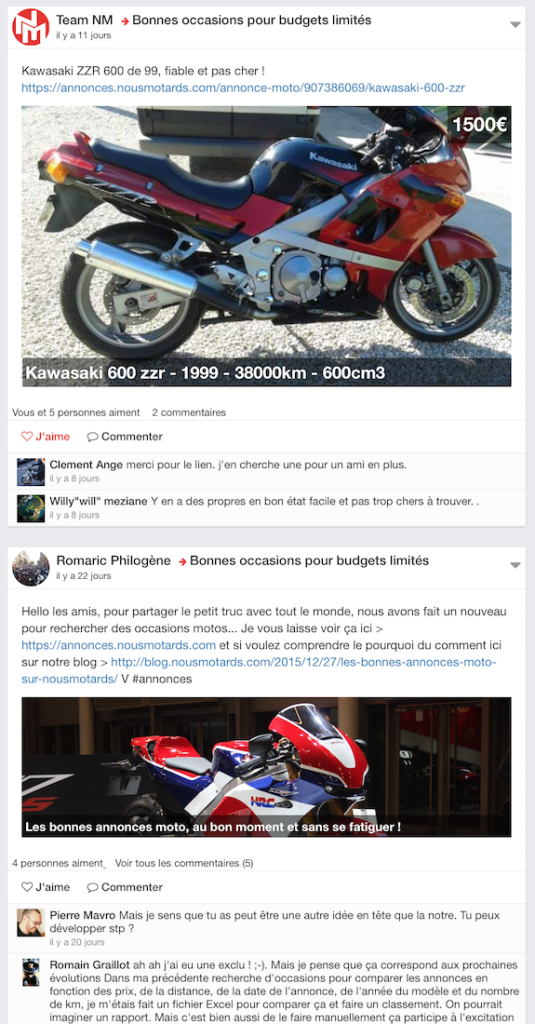

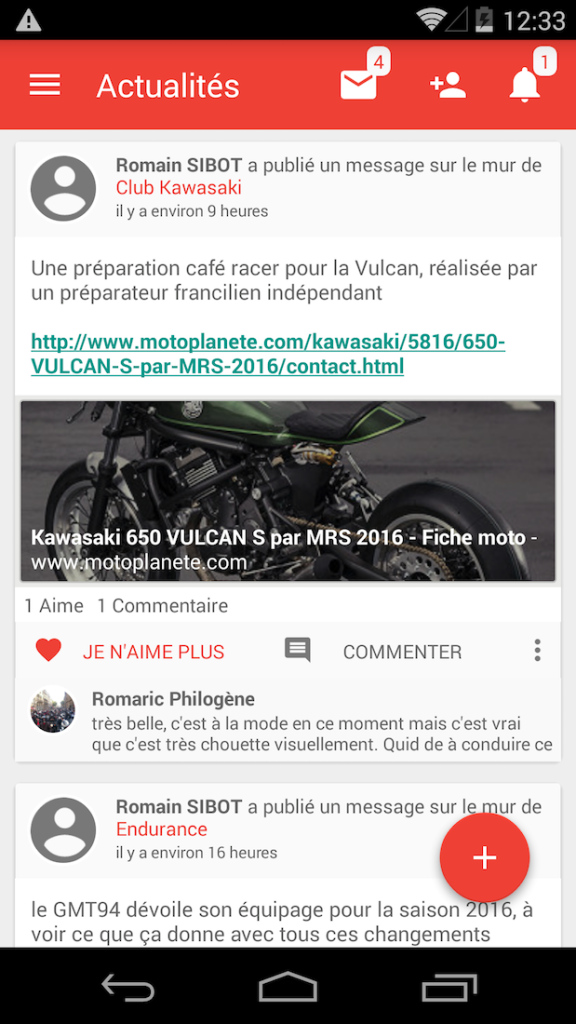

A news feed is the main page of a traditional social network. Its main goal is to show you all the recent updates from your friends and your interests. The Nousmotards news feed is like that of any other social network (Facebook, LinkedIn...): users can reach their feed from a web browser or from the NM mobile application, and it looks like the screenshots above.

As a user, you want to see the most relevant and most recent posts from your friends. And while you are scrolling, you want the best possible experience, which means no glitches or freezes in the UI and no latency while images and batches of news load.

2/ First attempt

In our first approach, we read data directly from Neo4j to display it to users. Direct access to the database is expensive: with 40 concurrent accesses, your server becomes so slow that nobody will ever come back.

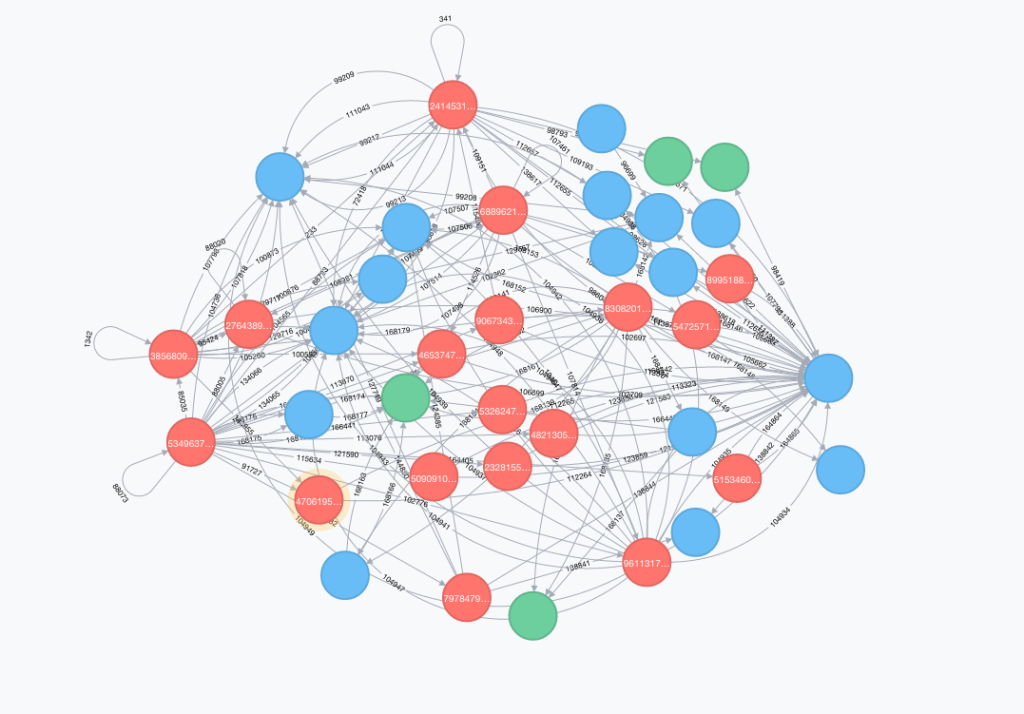

I will let you imagine the complexity of this, and the effort the database has to make to respond to 40 concurrent users: fetch the documents, enrich them and serialize them all into JSON before sending them to the requester. (Note: the red dots are users.)

Enrichment is the process of setting some properties depending on who made the request. For instance, Romaric has liked a post from Pierre. When Romaric requests his feed page, he gets that post back in the same state, marked as liked by him. When Pierre requests it, he sees that his post has been liked by Romaric.

Enrichment is very expensive in time, and it must be done for each document (10 documents per page) sent to the requester.
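To make the idea concrete, here is a minimal sketch of enrichment in Python. It is not our production code: the `Post` class, the `enrich` function and the `liked_by_me` field are hypothetical names chosen for this illustration. The point is only that the same stored post produces a different response depending on who asks.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Post:
    """A stored post (hypothetical shape); `liked_by` holds the ids of users who liked it."""
    post_id: str
    author: str
    text: str
    liked_by: set[str] = field(default_factory=set)


def enrich(post: Post, requester: str) -> dict:
    """Return a JSON-ready view of `post` as seen by `requester`."""
    return {
        "id": post.post_id,
        "author": post.author,
        "text": post.text,
        "like_count": len(post.liked_by),
        # Requester-specific property: this is the "enrichment" part.
        "liked_by_me": requester in post.liked_by,
    }


post = Post("p1", author="pierre", text="Sunday ride!", liked_by={"romaric"})
romaric_view = enrich(post, "romaric")  # liked_by_me is True for Romaric
pierre_view = enrich(post, "pierre")    # Pierre sees one like, not his own
```

In the first attempt, the lookup behind a flag like `liked_by_me` was a database read, which is why a page of 10 documents cost 10 extra reads.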

With 4 requesters, that is 4 read queries in the database, plus 40 enrichments that result in 40 more read requests. The server took at best 4 seconds to respond to each of them, which is very bad for the user experience. The more requesters you have, the longer the service takes to respond: latency ~= O(n^2), where n is the number of simultaneous requesters.
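The arithmetic above can be written out as a tiny Python model. The function name `database_reads` is hypothetical, and the model only counts reads: one feed query per requester plus one enrichment read per document on the page. In this model the number of reads grows linearly with the number of requesters, so the worse-than-linear latency would come from those reads competing for the same database. That last part is an interpretation, not something measured here.

```python
PAGE_SIZE = 10  # documents per feed page, as stated above


def database_reads(requesters: int, page_size: int = PAGE_SIZE) -> int:
    """Count database reads for one burst of simultaneous feed requests."""
    feed_queries = requesters                    # one feed query per requester
    enrichment_reads = requesters * page_size    # one read per document to enrich
    return feed_queries + enrichment_reads


reads_for_4 = database_reads(4)    # 4 + 40 = 44
reads_for_40 = database_reads(40)  # 40 + 400 = 440
```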

3/ Elasticsearch to the rescue

Elasticsearch is now a very popular document store, widely used by ops teams and developers. It is commonly used to store logs, search inside them and run sophisticated analytics. Access times are very good, and the product has a large community that keeps it evolving in the right direction.

At Nousmotards we use it for log analytics, monitoring and, above all, as a cache for feeds and walls!

Instead of using Neo4j directly to ship documents to the user, we placed Elasticsearch between Neo4j and the “core app”: the app retrieves documents from Elasticsearch, then enriches them from Redis before replying to the user.

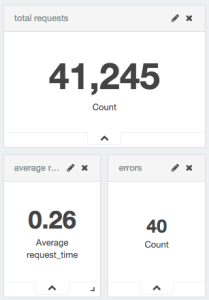
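Here is a rough sketch of that read path in Python. To keep it runnable without any server, `FeedIndex` and `LikeStore` are in-memory stand-ins for Elasticsearch and Redis. Their names, their methods and the idea that Redis holds one set of liker ids per post are all assumptions made for this example, not a description of our actual schema.

```python
from __future__ import annotations


class FeedIndex:
    """In-memory stand-in for the Elasticsearch index of ready-to-serve posts."""

    def __init__(self) -> None:
        self._feeds: dict[str, list[dict]] = {}

    def index_post(self, user_id: str, post: dict) -> None:
        # Keep the newest post first in the user's feed.
        self._feeds.setdefault(user_id, []).insert(0, post)

    def feed_page(self, user_id: str, page: int = 0, size: int = 10) -> list[dict]:
        start = page * size
        return self._feeds.get(user_id, [])[start:start + size]


class LikeStore:
    """In-memory stand-in for Redis: one set of liker ids per post (assumed layout)."""

    def __init__(self) -> None:
        self._likes: dict[str, set[str]] = {}

    def like(self, post_id: str, user_id: str) -> None:
        self._likes.setdefault(post_id, set()).add(user_id)

    def has_liked(self, post_id: str, user_id: str) -> bool:
        return user_id in self._likes.get(post_id, set())

    def count(self, post_id: str) -> int:
        return len(self._likes.get(post_id, set()))


def read_feed(user_id: str, index: FeedIndex, likes: LikeStore, page: int = 0) -> list[dict]:
    """Serve one feed page without touching the graph database."""
    docs = index.feed_page(user_id, page)
    return [
        {
            **doc,
            # Enrichment now comes from the fast key-value store.
            "liked_by_me": likes.has_liked(doc["id"], user_id),
            "like_count": likes.count(doc["id"]),
        }
        for doc in docs
    ]


index = FeedIndex()
likes = LikeStore()
index.index_post("romaric", {"id": "p1", "author": "pierre", "text": "Sunday ride!"})
likes.like("p1", "romaric")
feed = read_feed("romaric", index, likes)
```

With real clients, `feed_page` would be a search query against Elasticsearch and `has_liked` / `count` would be set operations in Redis (for example `SISMEMBER` and `SCARD`), but the shape of the read path stays the same.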

Has Neo4j disappeared? No, it is just no longer used for read requests. Now, with 40 concurrent requests, we reply in 200 to 300 ms, which is a huge difference! Neo4j is no longer bothered by users fetching their latest updates and can process write requests smoothly. (Stats from yesterday: 41245 requests, 260 ms average request time.)

4/ To conclude

Elasticsearch is our lifesaver, and we are using it for more and more things at Nousmotards. On the other hand, it is not a database, and you should not use it as your primary datastore! Indices can get corrupted, and you should be able to reload all your data from scratch. Once you know that, you can start using it to its full potential, with no fear of breaking things 😉
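As an illustration of "reload from scratch", here is a small Python sketch. `SearchCache` is a hypothetical in-memory stand-in for the Elasticsearch side, and `primary_posts` stands for whatever you export from your primary datastore. The sketch builds a fresh index entirely from the primary data and only then switches reads over to it. With Elasticsearch, a common way to get the same effect is to point an index alias at the newly built index.

```python
from __future__ import annotations

from typing import Iterable, Optional


class SearchCache:
    """In-memory stand-in for a search cache that can be rebuilt at any time."""

    def __init__(self) -> None:
        self._indices: dict[str, dict[str, dict]] = {}
        self._live: Optional[str] = None  # name of the index that serves reads

    def rebuild_from(self, primary_posts: Iterable[dict], name: str) -> None:
        # Build the new index from the source of truth only,
        # never from the old (possibly corrupt) index.
        self._indices[name] = {post["id"]: dict(post) for post in primary_posts}
        # Switch reads to the new index, then drop the old one.
        old, self._live = self._live, name
        if old is not None and old != name:
            self._indices.pop(old, None)

    def get(self, post_id: str) -> Optional[dict]:
        if self._live is None:
            return None
        return self._indices[self._live].get(post_id)


primary_posts = [
    {"id": "p1", "author": "pierre", "text": "Sunday ride!"},
    {"id": "p2", "author": "romaric", "text": "New helmet"},
]
cache = SearchCache()
cache.rebuild_from(primary_posts, "feed_v1")
# Later, after a corrupt index or a mapping change: rebuild and switch.
cache.rebuild_from(primary_posts, "feed_v2")
restored = cache.get("p2")
```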

sources:

- News feed with Elasticsearch @Viadeo

- Activity Stream

- News feed performance on iOS @Facebook

- News feed performance on Android @Facebook

For more information about Nousmotards: blog.nousmotards.com

Join us! :-)